Requirements

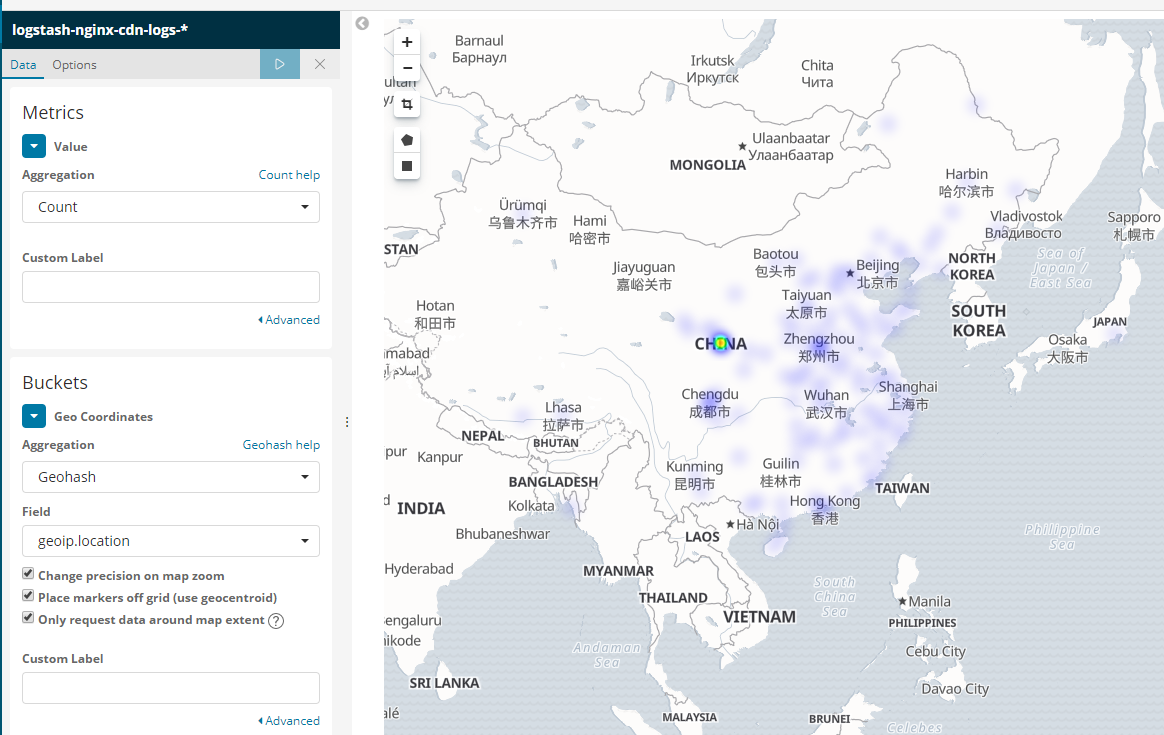

Our operations team needs a map showing where the website's visitors come from, so they can use it when making operational decisions.

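The website is served through Alibaba Cloud (Aliyun) CDN, so the origin server's access logs record the addresses of the CDN nodes rather than those of the real clients, and cannot be used as the data source. Alibaba Cloud does, however, support exporting CDN access logs through its API. The plan is therefore to download the CDN logs to a server, feed them through the ELK log pipeline, use the Logstash GeoIP plugin to convert each client IP into latitude and longitude, and display the result on a Kibana map.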

Implementation steps

1. Export the CDN access logs

    #!/usr/bin/env python
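
As a rough illustration of the download step, here is a minimal sketch. It assumes the list of log file URLs has already been obtained from the CDN log API (that call is not shown) and that each file is gzip-compressed. The helper names `local_name_for`, `gunzip_file` and `download_log` are hypothetical and are not taken from the original script.

```python
import gzip
import os
import shutil
import urllib.request
from urllib.parse import urlparse


def local_name_for(log_url, dest_dir):
    """Map a log URL to a local path, dropping the query string and a .gz suffix."""
    name = os.path.basename(urlparse(log_url).path)
    if name.endswith(".gz"):
        name = name[:-3]
    return os.path.join(dest_dir, name)


def gunzip_file(gz_path, out_path):
    """Decompress gz_path into out_path without loading the whole file into memory."""
    with gzip.open(gz_path, "rb") as src, open(out_path, "wb") as dst:
        shutil.copyfileobj(src, dst)


def download_log(log_url, dest_dir):
    """Download one (assumed gzip-compressed) log file and leave it as plain text."""
    os.makedirs(dest_dir, exist_ok=True)
    out_path = local_name_for(log_url, dest_dir)
    gz_path = out_path + ".gz"
    urllib.request.urlretrieve(log_url, gz_path)
    gunzip_file(gz_path, out_path)
    os.remove(gz_path)
    return out_path
```

Calling `download_log(url, "/data/cdn-logs")` for each URL would leave plain-text files in a directory that Filebeat can watch; the directory name here is only an example.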

2. Configure Filebeat to collect the logs

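Filebeat collects the logs and ships them to Logstash, which writes everything to Kafka. Buffering in Kafka keeps logs from being lost when the data volume gets too large. Logstash then consumes the messages from Kafka, resolves each IP to latitude and longitude, and stores the result in Elasticsearch (ES).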

The Filebeat version is 5.5.3.

    filebeat.prospectors:

3. Write to Kafka from Logstash

    output {

4. Resolve latitude and longitude with the Logstash GeoIP plugin

    input {

Here `remove_field` is used to delete the fields that are not needed, which makes the documents stored in ES much smaller.
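
To make the three things this stage does more concrete (parse the line, attach coordinates, drop unneeded fields), here is a small Python sketch of the same idea. It is not the Logstash configuration itself. The log line layout, the field names, and the `lookup` callable (anything that maps an IP to a `(lat, lon)` pair, for example a wrapper around a GeoIP database) are all assumptions made for illustration, so check the pattern against your real CDN logs.

```python
import json
import re

# Assumed layout of one CDN log line (verify against your own logs):
# [time] client_ip proxy_ip response_ms "referer" "METHOD url" status
# request_bytes response_bytes cache_status "user_agent" "content_type"
LOG_PATTERN = re.compile(
    r'\[(?P<timestamp>[^\]]+)\] '
    r'(?P<client_ip>\S+) '
    r'(?P<proxy_ip>\S+) '
    r'(?P<response_time_ms>\d+) '
    r'"(?P<referer>[^"]*)" '
    r'"(?P<method>\S+) (?P<url>[^"]*)" '
    r'(?P<status>\d{3}) '
    r'(?P<request_bytes>\d+) '
    r'(?P<response_bytes>\d+) '
    r'(?P<cache_status>\S+) '
    r'"(?P<user_agent>[^"]*)" '
    r'"(?P<content_type>[^"]*)"'
)

# Made-up sample line using a documentation-only IP address.
SAMPLE_LINE = (
    '[01/Oct/2017:10:00:00 +0800] 203.0.113.7 - 35 "-" '
    '"GET http://www.example.com/index.html" 200 180 2048 HIT '
    '"Mozilla/5.0" "text/html"'
)


def parse_line(line):
    """Return the named fields of one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line.strip())
    return match.groupdict() if match else None


def enrich_with_geo(event, lookup):
    """Attach geoip.location using a hypothetical lookup(ip) -> (lat, lon) or None."""
    coords = lookup(event["client_ip"])
    if coords is not None:
        lat, lon = coords
        event["geoip"] = {"location": {"lat": lat, "lon": lon}}
    return event


def remove_fields(event, names):
    """Return a copy of the event without the given top-level fields."""
    return {key: value for key, value in event.items() if key not in names}


def fake_lookup(ip):
    """Stand-in for a real GeoIP database; always returns the same point."""
    return (39.9, 116.4)
```

For example, `remove_fields(enrich_with_geo(parse_line(SAMPLE_LINE), fake_lookup), {"user_agent", "referer"})` gives a smaller event that still carries `geoip.location`. For the Kibana map to work, that location field has to be mapped as a `geo_point` in ES.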

5. Draw the map in Kibana

In Kibana, click Visualize in the left-hand navigation and choose the Coordinate Map view under Maps.

Once the parameters have been adjusted, the result looks roughly like this.
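
One of the parameters worth understanding is the aggregation: the Coordinate Map groups points with a geohash grid on the location field, so nearby visitors fall into the same cell and are drawn as one circle. The sketch below is a plain Python version of standard geohash encoding, included only to show how a latitude/longitude pair becomes a grid cell. The function name is made up for this example, and Elasticsearch does this internally.

```python
_BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"


def geohash_encode(lat, lon, precision=5):
    """Encode a point as a geohash string of the given length."""
    lat_lo, lat_hi = -90.0, 90.0
    lon_lo, lon_hi = -180.0, 180.0
    chars = []
    bits = 0          # number of bits collected for the current character
    value = 0         # the bits themselves, most significant first
    use_lon = True    # bits alternate between longitude and latitude
    while len(chars) < precision:
        if use_lon:
            mid = (lon_lo + lon_hi) / 2
            if lon >= mid:
                value = value * 2 + 1
                lon_lo = mid
            else:
                value = value * 2
                lon_hi = mid
        else:
            mid = (lat_lo + lat_hi) / 2
            if lat >= mid:
                value = value * 2 + 1
                lat_lo = mid
            else:
                value = value * 2
                lat_hi = mid
        use_lon = not use_lon
        bits += 1
        if bits == 5:  # every 5 bits become one base-32 character
            chars.append(_BASE32[value])
            bits = 0
            value = 0
    return "".join(chars)
```

A shorter geohash means a larger cell: two points that share the same prefix at a given precision land in the same bucket on the map, which is why lowering the precision merges circles and raising it splits them.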