ES Log Index Configuration

1. Index configuration for regular services:

- elasticsearch version 6.5.3

- logstash version 6.5.3

- filebeat version 5.5.3

- kafka version 2.11-0.10.1.1

- zookeeper version 3.4.6

- kibana index pattern for queries: logstash-flume-fusion-*

1.1 Log consumer configuration: logstash reads from kafka and writes to ES

    cat logstash-6.5.3-es/conf.d/logstash-flume-fusion

1.2 Log producer configuration: logstash receives filebeat logs and forwards them to kafka

    cat logstash-6.5.3-kafka/conf.d/logstash-flume-fusion

1.3 filebeat configuration

    cat filebeat/filebeat.yml

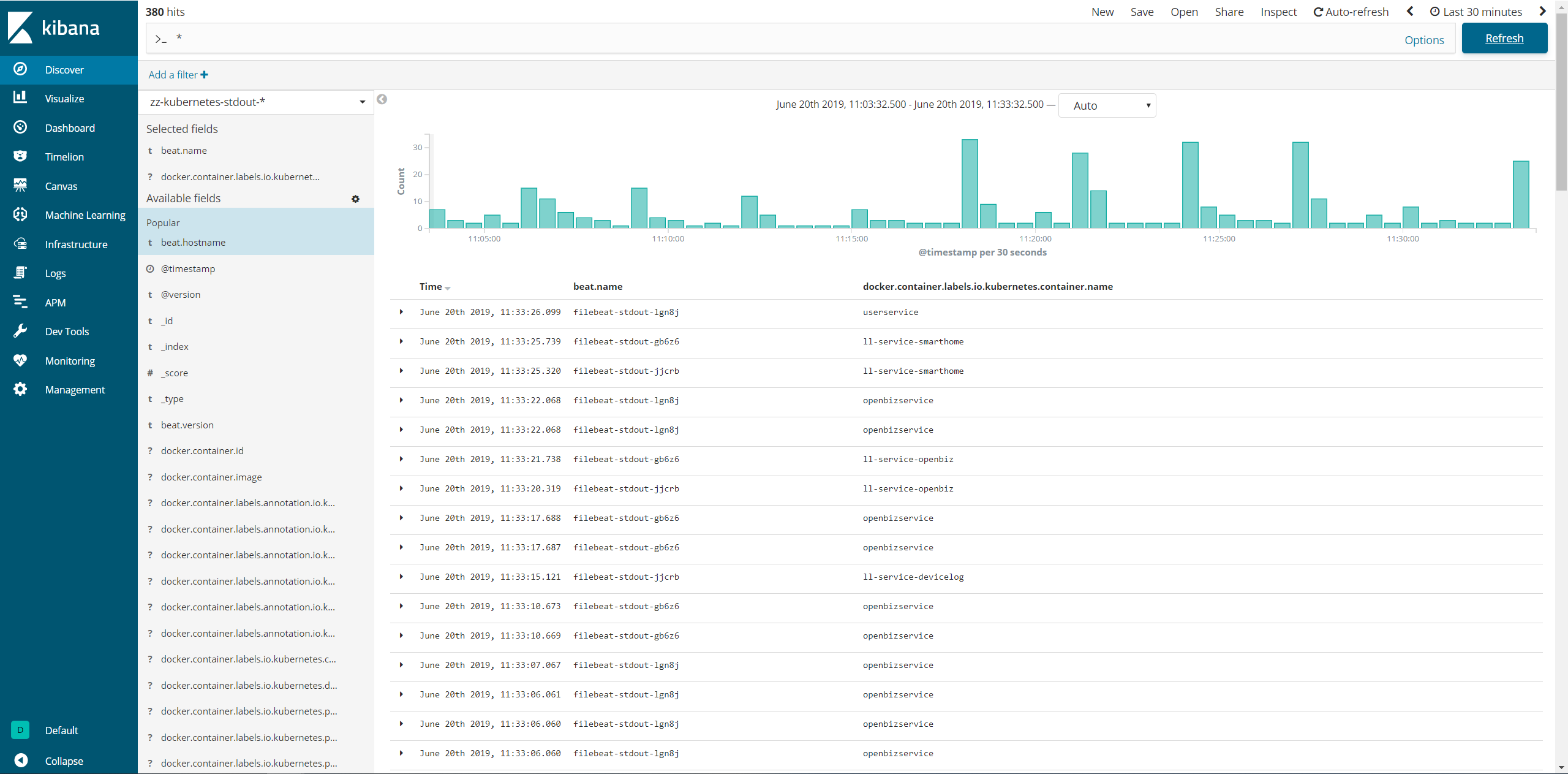

2. Collecting kubernetes container stdout logs

- filebeat version 6.2.4

- kibana index pattern for queries: zz-kubernetes-stdout-*

2.1 Log consumer configuration: logstash reads from kafka and writes to ES

    cat logstash-6.5.3-es/conf.d/zz-kubernetes-stdout.conf

2.2 Log producer configuration: logstash receives filebeat logs and forwards them to kafka

    cat logstash-6.5.3-kafka/conf.d/zz-kubernetes-stdout.conf

2.3 filebeat configuration (versions 6.0 and above support parsing container logs)

    cat filebeat/filebeat.yml

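Here I use multiline to merge multi-line entries in the logs collected from containers, then use exclude_lines to drop the stdout logs of the filebeat-service container, which does not need to be collected. This exclusion is not absolute, though: it only filters on that string within the log field.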
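As a rough illustration of the multiline and exclude_lines settings described above, the sketch below writes a hypothetical Filebeat 6.x fragment to a temporary file. It is not the author's actual filebeat.yml: the log path, the multiline pattern, and the exclude pattern are all assumptions. With `json.message_key: log`, Filebeat applies multiline and line filtering to the `log` key, which is why the exclusion is a text match rather than a per-container switch.

```shell
# Write a hypothetical Filebeat 6.x config fragment to a temp file for review.
conf_file="$(mktemp)"
cat > "$conf_file" <<'EOF'
filebeat.prospectors:
- type: log
  # Assumed location of Docker json-file logs; adjust to your runtime.
  paths:
    - /var/lib/docker/containers/*/*-json.log
  # Decode each JSON line; multiline and line filtering then apply to "log".
  json.message_key: log
  json.keys_under_root: true
  # Lines that start with whitespace are appended to the previous line.
  multiline.pattern: '^[[:space:]]'
  multiline.negate: false
  multiline.match: after
  # Drops any event whose "log" text matches, whichever container wrote it.
  exclude_lines: ['filebeat-service']
EOF
echo "wrote $conf_file"
```

Because the match is on text, output from filebeat-service that never mentions its own name would still be collected, and lines from other containers that do mention it would be dropped.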

2.4 Extension: running filebeat as a k8s DaemonSet to collect logs

    cat filebeat-stdout.yaml
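For readers without the original manifest, here is a minimal sketch of what a filebeat DaemonSet could look like. It is not the author's filebeat-stdout.yaml: the resource name, namespace, ConfigMap name, and mount paths are hypothetical, and `apps/v1` assumes a reasonably recent cluster (older clusters used a beta API group).

```shell
# Write a hypothetical DaemonSet manifest to a temp file; nothing is applied.
manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat-stdout          # hypothetical name
  namespace: kube-system         # assumed namespace
spec:
  selector:
    matchLabels:
      app: filebeat-stdout
  template:
    metadata:
      labels:
        app: filebeat-stdout
    spec:
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat:6.2.4
        args: ["-c", "/etc/filebeat.yml", "-e"]
        securityContext:
          runAsUser: 0           # container logs on the host are root-owned
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          subPath: filebeat.yml
          readOnly: true
        - name: containers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: config
        configMap:
          name: filebeat-config  # hypothetical ConfigMap holding filebeat.yml
      - name: containers
        hostPath:
          path: /var/lib/docker/containers
EOF
echo "wrote $manifest; review it, then: kubectl apply -f $manifest"
```

A DaemonSet schedules one filebeat pod per node, so each pod only needs to read the container log directory of its own host.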

Finally, here is a screenshot of the result after collection.

3. Some common operations

Check cluster status

    curl '127.0.0.1:9200/_cluster/health?pretty'
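When the health check runs from a script, it can help to pull out just the `status` field (green, yellow, or red). The helper below is a small sketch using only sed; the function name `health_status` and the sample JSON are made up for illustration.

```shell
# Extract the "status" value from _cluster/health JSON read on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Usage against a live node (host and port as in the command above):
#   curl -s '127.0.0.1:9200/_cluster/health?pretty' | health_status

# Offline demonstration with a made-up response body.
sample='{"cluster_name":"demo","status":"yellow","number_of_nodes":1}'
printf '%s\n' "$sample" | health_status
```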

Delete logs from ES

    curl -XDELETE localhost:9200/logstash-xy-msp-general-2019.03.11
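Since the index name ends in a date, the name for a given age can be computed rather than typed by hand. This is a sketch that assumes GNU `date` and the `prefix-YYYY.MM.DD` naming seen above; the helper name `index_for_days_ago` and the 30-day retention are hypothetical. It only prints the command, so nothing is deleted.

```shell
# Build the daily index name for N days ago (requires GNU date for -d).
index_for_days_ago() {
  local prefix="$1" days="$2"
  printf '%s-%s\n' "$prefix" "$(date -d "${days} days ago" +%Y.%m.%d)"
}

# Dry run: print the DELETE command instead of executing it.
echo "curl -XDELETE localhost:9200/$(index_for_days_ago logstash-xy-msp-general 30)"
```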

Check the status of every index

    curl -XGET "http://localhost:9200/_cat/indices?v"

Delete all indices

    curl -XDELETE -u elastic:changeme gdg-dev:9200/_all

Delete a specific index

    curl -XDELETE -u elastic:changeme gdg-dev:9200/test-2019.01.08

Delete custom templates

    curl -XDELETE 'master:9200/_template/temp*'

View the template_1 template

    curl -XGET master:9200/_template/template_1

Get detailed information for a specific index

    curl -XGET 'master:9200/system-syslog-2018.12?pretty'

Get detailed information (mappings) for all indices

    curl -XGET 'master:9200/_mapping?pretty'

Check index replica counts (the rep column)

    curl -XGET 'localhost:9200/_cat/indices?v'

Change the index replica count

    curl -XPUT 'http://localhost:9200/_template/logstash-job-admin-*' -H 'Content-Type: application/json' -d'{
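An index template only affects indices created after it is stored. For indices that already exist, the replica count can instead be changed through the index `_settings` endpoint. The sketch below is not a reconstruction of the command above: the helper names `replica_payload` and `set_replicas` are hypothetical, and the host and replica count in the usage line are placeholders.

```shell
# Build the JSON body for the update-index-settings API.
replica_payload() {
  printf '{"index":{"number_of_replicas":%d}}' "$1"
}

# $1 = host:port, $2 = index name or pattern, $3 = replica count
set_replicas() {
  curl -s -XPUT "http://$1/$2/_settings" \
    -H 'Content-Type: application/json' \
    -d "$(replica_payload "$3")"
}

# Example call (not executed here):
#   set_replicas localhost:9200 'logstash-job-admin-*' 0

# Show the request body that would be sent.
replica_payload 0; echo
```

Setting replicas to 0 is common on single-node clusters, where replica shards can never be assigned and would otherwise keep the cluster status yellow.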

Check whether kafka contains messages of this type

    ./bin/kafka-console-consumer.sh --zookeeper localhost:2181 --topic logstash-ms-service-context --from-beginning