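Problem description

While adding an index pattern in Kibana today, I found that it did not take effect. The page showed no error; the form just flashed and nothing was created.

I also noticed that the logs of every project lagged far behind the current time, and filebeat kept reporting i/o timeout.

The specific errors are as follows.

filebeat cannot send data to logstash.

(IP addresses are masked with x.)

    logstash/async.go:235 Failed to publish events caused by: read tcp 172.17.x.x:39092->172.17.x.x:5044: i/o timeout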

logstash reports the following. It cannot send data to es, because es keeps rejecting every request.

    [INFO ][logstash.outputs.elasticsearch] Retrying individual bulk actions that failed or were rejected by the previous bulk request. {:count=>1}

The error says the index is read-only:

    index read-only / allow delete (api)];"}

es reports errors as well, and they trace back to the same read-only index problem:

    [2018-08-23T17:30:35,546][WARN ][o.e.x.m.e.l.LocalExporter] unexpected error while indexing monitoring document
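Before changing anything, it can help to confirm which indices actually carry the block. The following is a minimal sketch, assuming Elasticsearch is reachable at `http://localhost:9200` (the address used by the curl command in this post); `ES_URL`, `blocks_url` and `list_index_blocks` are names made up for this illustration.

```shell
#!/bin/sh
# Assumption: Elasticsearch listens on localhost:9200; override ES_URL if not.
ES_URL="${ES_URL:-http://localhost:9200}"

# Build the URL that returns only the index.blocks.* settings of every index.
blocks_url() {
  printf '%s/_all/_settings/index.blocks.*?pretty\n' "$ES_URL"
}

# Print the block settings of all indices. An affected index should list
# "read_only_allow_delete" : "true" under its "blocks" section.
list_index_blocks() {
  curl -s "$(blocks_url)"
}
```

Calling `list_index_blocks` after sourcing the script prints the settings; indices without any block should come back with empty settings.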

Solution

Method 1

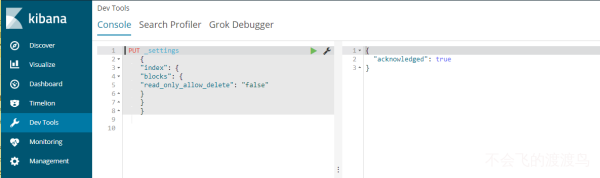

Run the following statement in the Kibana Dev Tools console:

    PUT _settings

Method 2

If the command cannot be run from Kibana, use the following command instead:

    curl -XPUT -H "Content-Type: application/json" http://localhost:9200/_all/_settings -d '{"index.blocks.read_only_allow_delete": null}'

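The cause: once a node's disk usage exceeds 95% (the default flood-stage watermark, `cluster.routing.allocation.disk.watermark.flood_stage`), every index that has one or more shards allocated on that node is forced into read-only mode (`index.blocks.read_only_allow_delete`). Free up disk space before clearing the block, otherwise Elasticsearch applies it again.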
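The disk check can be sketched as a small script. This is only an illustration under stated assumptions: `ES_URL` and `DATA_PATH` are placeholders (the data directory `/var/lib/elasticsearch` is a guess at a typical package install, not something taken from this setup), and the function names are invented here. It uses the `_cat/allocation` API to show per-node disk usage as Elasticsearch sees it, plus `df` for the local data path.

```shell
#!/bin/sh
# Assumptions: ES_URL and DATA_PATH are placeholders; adjust both to your setup.
ES_URL="${ES_URL:-http://localhost:9200}"
DATA_PATH="${DATA_PATH:-/var/lib/elasticsearch}"
FLOOD_STAGE=95   # default of cluster.routing.allocation.disk.watermark.flood_stage

# Succeed (exit status 0) when the usage percentage has reached the threshold.
over_flood_stage() {
  [ "$1" -ge "${2:-$FLOOD_STAGE}" ]
}

# Print the usage percentage (digits only) of the filesystem holding a path.
disk_used_percent() {
  df -P "$1" | awk 'NR==2 { gsub("%", "", $5); print $5 }'
}

# Show per-node disk usage from Elasticsearch, then check the local data path.
check_disk() {
  curl -s "$ES_URL/_cat/allocation?v"
  used=$(disk_used_percent "$DATA_PATH")
  if [ -z "$used" ]; then
    echo "cannot read disk usage for $DATA_PATH" >&2
    return 1
  fi
  if over_flood_stage "$used"; then
    echo "disk at ${used}%: free space before clearing the read-only block"
  else
    echo "disk at ${used}%: below the flood stage"
  fi
}
```

Run `check_disk` after sourcing the script. Once usage is back under the threshold, the commands from Method 1 or Method 2 should stick.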